This Rice University Professor Developed Cancer-Detection Technology

Delivered by Trong Tung Nguyen on Thursday, December 8, 2022.

Trong Tung Nguyen, the lead contributor of the title article, recently presented at the 2022 Asian Conference on Computer Vision (ACCV), a leading international conference on computer vision and pattern recognition, representing VinUni-Illinois Smart Health Center (VISHC) and his fellow contributors. VISHC is incredibly proud of Trong Tung and wishes to introduce the article’s findings to our readers, both to celebrate this achievement and to inspire further interest and research in smart health applications.

Pill classification has been a heavily studied task in healthtech. Recognizing different pills from real-world images is essential to various healthcare applications, including helping caregivers and patients identify unknown pills using smart devices. Existing pill recognition models depend heavily on traditional machine learning systems, which are trained on a fixed set of data. While data storage can be vast, a problem arises when novel data arrives at the system. A common approach is then to build an entirely new pool of data combining the old and the novel data and to retrain the AI system on it. With 40 to 50 pills being approved every year, this method proves time-consuming, slow to respond, and inefficient.

This is where Class Incremental Learning (CIL), a fundamental research topic in machine learning, comes in. In the general CIL setting, disjoint sets of classes arrive at the learning algorithm gradually. The system learns incrementally from the new classes, extending the existing model’s knowledge without retraining on all of the data. This allows for a more flexible and memory-efficient training strategy.
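The CIL setting described above can be sketched in a few lines. This is only an illustration of how classes might be grouped into disjoint tasks that arrive one after another; the function names and the 10-class example are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def split_into_tasks(class_ids: Sequence[int], classes_per_task: int) -> List[List[int]]:
    """Partition class ids into consecutive, disjoint groups (one group per task)."""
    if classes_per_task <= 0:
        raise ValueError("classes_per_task must be positive")
    ids = list(class_ids)
    return [ids[i:i + classes_per_task] for i in range(0, len(ids), classes_per_task)]


def seen_classes_per_step(tasks: List[List[int]]) -> List[List[int]]:
    """Classes the model must recognize after each incremental step."""
    seen: List[int] = []
    history: List[List[int]] = []
    for task in tasks:
        seen = seen + task  # knowledge grows; earlier classes are never dropped
        history.append(seen)
    return history


# Hypothetical example: 10 pill classes arriving 5 at a time.
tasks = split_into_tasks(range(10), classes_per_task=5)
for step, classes in enumerate(seen_classes_per_step(tasks), start=1):
    print(f"step {step}: model must classify {len(classes)} classes")
```

At each step the learner sees training data only for the newly arrived group, yet it is evaluated on every class seen so far, which is what makes forgetting a concern.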

The study tackles novel incoming classes in a pill recognition system with an incremental learning paradigm, using a special modification. Conventional CIL lets the machine learn from RGB images only, which regularly fails to distinguish similar-looking pills and to recognize novel pill inputs. The study therefore seeks domain-specific knowledge that can accompany the RGB images to improve the classification system. In particular, it inspects several additional visual features of the pill (each denoted an “information stream”) that could aid the classification process. The study focuses on two questions: how should the information streams be incorporated, or fused, into the CIL system, and which combinations of fused streams give the best performance? The following sections discuss the multi-stream CIL framework and compare different fusion mechanisms with traditional methods. The research team is the first to have examined the capability of incremental learning in identifying pill categories, and hopes to lay the foundation for future research on machine learning in healthcare.

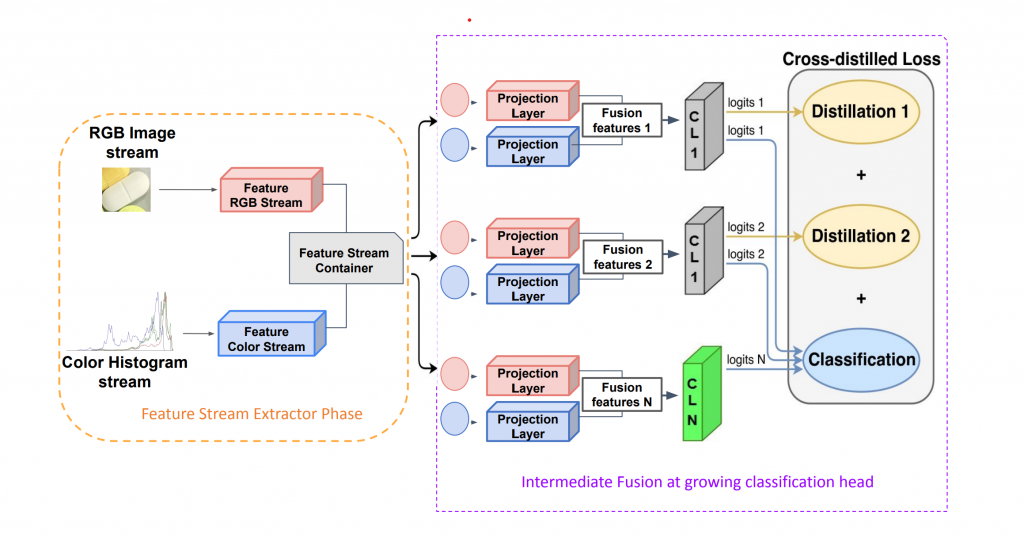

Unlike the traditional single-stream training paradigm used by almost all existing exemplar-based CIL methods, VISHC’s researchers proposed a new learning mechanism called CG-IMIF (Color Guidance with Multi-stream Intermediate Fusion), which uses an intermediate fusion framework to incorporate additional information streams. As shown in the figure below, the framework has three components. The first is the traditional single-stream learning paradigm, which takes only the original RGB image stream as input. The second consists of auxiliary streams, such as color histograms and edge images, which give the system distinguishing information for hard examples. The third is the placement of the information streams in the incremental layer as a separate task.

The proposed architecture differs from past methods, which depended only on RGB images with no additional streams, or which placed the extra streams before the incremental learning layer.

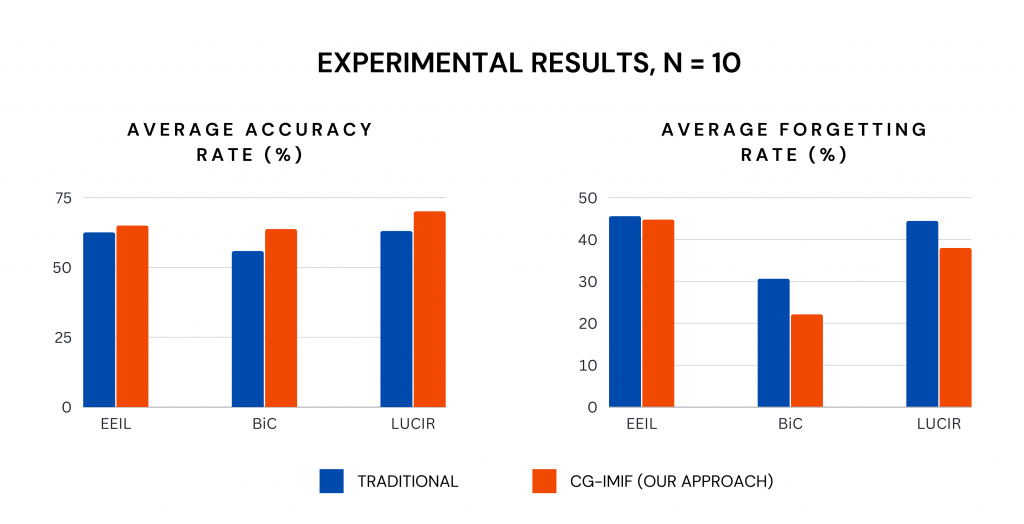
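To make the idea of an auxiliary stream more concrete, the sketch below computes a normalized color histogram from raw pixels and joins it with features from the RGB stream. It is not the CG-IMIF implementation: the concatenation rule, the function names, the bin count, and the placeholder `rgb_features` vector are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each value in 0..255


def color_histogram(pixels: Sequence[Pixel], bins_per_channel: int = 4) -> List[float]:
    """Normalized per-channel histogram, used here as an example auxiliary stream."""
    if not pixels:
        raise ValueError("image has no pixels")
    hist = [0.0] * (3 * bins_per_channel)
    bin_width = 256 / bins_per_channel
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            index = min(int(value / bin_width), bins_per_channel - 1)
            hist[channel * bins_per_channel + index] += 1.0
    total = float(len(pixels))
    return [count / total for count in hist]


def fuse_intermediate(rgb_features: Sequence[float],
                      stream_features: Sequence[float]) -> List[float]:
    """Join features from two streams before a shared classifier.

    Concatenation is an assumed fusion rule, chosen only for simplicity.
    """
    return list(rgb_features) + list(stream_features)


# Hypothetical 2x2 image flattened to pixels: two reddish and two bluish pixels.
image = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (5, 20, 240)]
rgb_features = [0.12, 0.80, 0.33]  # placeholder for a learned embedding of the RGB stream
fused = fuse_intermediate(rgb_features, color_histogram(image))
print(f"fused feature length: {len(fused)}")
```

In a real system the RGB features would come from a trained network, and the fused vector would feed the incremental classification layer rather than being printed.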

The experiments use VAIPE-Pill (a 7,294-image dataset specifically designed to promote research on medicine recognition) to compare the incremental accuracy and the incremental forgetting rate of one specific fusion, Color Guidance with Multi-stream Intermediate Fusion (CG-IMIF), against traditional methods. Further detail on the choice of this fusion technique is given in the study. The results are shown below.

The experimental results favor the CG-IMIF approach. Its higher accuracy and lower forgetting rates show that the team’s approach outperforms traditional methods. The same holds when the number of task categories varies (N = 5; N = 15). CG-IMIF also proves superior, both as a fusion technique and as a stream combination, to early-stage fusion.
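For readers unfamiliar with the two metrics, here is a minimal sketch of how they are commonly computed in the continual learning literature. The exact definitions used in the study may differ, and the accuracy values below are made up purely to exercise the functions.

```python
from typing import List


def average_incremental_accuracy(step_accuracies: List[float]) -> float:
    """Mean of the overall accuracy measured after each incremental step."""
    if not step_accuracies:
        raise ValueError("need at least one step")
    return sum(step_accuracies) / len(step_accuracies)


def average_forgetting(acc: List[List[float]]) -> float:
    """acc[i][j] is the accuracy on task j measured after training step i (j <= i).

    For every task except the last, forgetting is the best accuracy the task
    reached at an earlier step minus its accuracy after the final step.
    The function returns the mean of those drops.
    """
    final = len(acc) - 1
    if final < 1:
        raise ValueError("forgetting needs at least two steps")
    drops = []
    for task in range(final):
        best_earlier = max(acc[step][task] for step in range(task, final))
        drops.append(best_earlier - acc[final][task])
    return sum(drops) / len(drops)


# Made-up accuracies for three incremental steps (not results from the study).
per_task = [
    [0.90],
    [0.80, 0.85],
    [0.70, 0.75, 0.88],
]
overall = [0.90, 0.82, 0.78]
print(f"average incremental accuracy: {average_incremental_accuracy(overall):.2f}")
print(f"average forgetting: {average_forgetting(per_task):.2f}")
```

A method that learns new pills well while keeping old ones intact shows a high first number and a low second number.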

The results introduce a tested, novel framework for pill classification systems. Incremental Multi-stream Intermediate Fusion (IMIF) can improve the performance of a single-stream CIL method by adding another information stream. This lays the groundwork for improving any exemplar-based base approach that allows a stream to be attached. The team next aims to extend the pill recognition system to a more powerful but also more challenging capability: incremental learning for the detection problem. The team’s findings contribute to the understanding of machine intelligence and to the advancement of smart health.

“This work is fundamental research in machine learning that aims to train a machine learning system to learn continuously from new data and knowledge. It opens up new opportunities to handle real-time medical data.”

Assistant Professor Hieu Pham, co-author of the paper, says.

“With this novel learning paradigm for pill classification system, I hope that my research could lay a foundation for further research on tackling open domain medical data. This would significantly contribute to our ambitious goal of delivering impactful research ideas towards a sustainable and state-of-the-art healthcare solution for people all around the world.”

Trong Tung Nguyen, Research Assistant at VISHC.

CG-IMIF is the result of a collaboration between researchers at VinUni-Illinois Smart Health Center, VinUniversity and International Research Center for Artificial Intelligence (BKAI), HUST. The key contributors to the work represented in this post include Trong Tung Nguyen, Hieu H. Pham, Phi Le Nguyen, Thanh Hung Nguyen, and Minh Do.

For full access to the research paper, use this link here.

The code used in the experiments is open for public use here.

Check out our poster for a holistic view of our research here.

BY NGOC PHAM | NEWS VISHC OFFICE

Ngoc Pham is currently a tech editor for AI in Medicine at VinUni-Illinois Smart Health Center. She looks for stories about how emerging technologies such as AI and Data Science are changing medicine and biomedical research.